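Security Analysis Engineer (71SRC) – Beijing

Responsibilities:

Perform security testing of web and mobile apps, and of hosts and applications on internal and external networks; produce test reports and remediation recommendations, and follow up with business teams on fixes

Respond to security incidents: promptly analyze, verify, report on, and trace external threats and threat intelligence

Track the latest security vulnerabilities, drive business teams to fix them, and help verify the fixes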

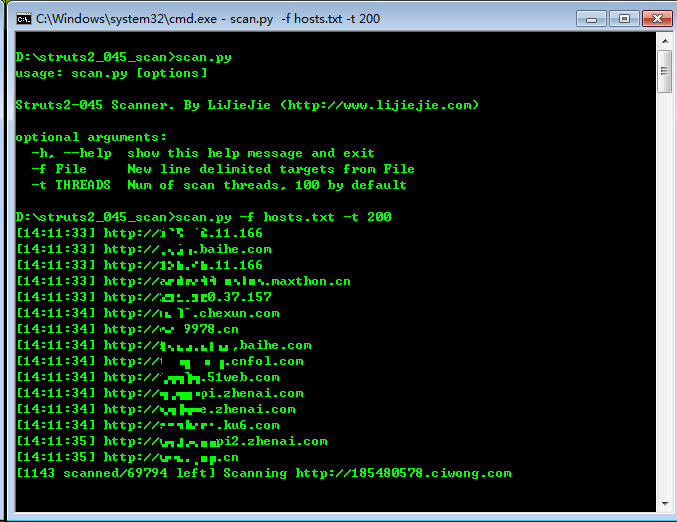

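Develop and enhance the existing scanning system and incident-response toolchain

Requirements:

Bachelor's degree or above

Think like a hacker; CTF experience is a plus, as is having submitted vulnerabilities to security response centers (SRCs)

Proficient in Python and Linux shell

Familiar with the OWASP Top 10; familiarity with Android and iOS security mechanisms is a plus

Familiarity with assembly, decompilation, unpacking, hooking, and encryption/decryption techniques is a plus, as is proficiency with reverse-engineering tools such as IDA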

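Security Service Development Engineer – Beijing

Responsibilities:

Develop and maintain internal security services: bastion hosts, SIEM, intrusion detection, honeypots, situational awareness, and anti-DDoS services

Extract, analyze, mine, and summarize large volumes of security sample data

Requirements:

Bachelor's degree or above in computer science, information security, or a related field; a background in security is a plus

Proficiency in Java or Python and common web development frameworks is a plus

Experience with C development on Linux is a plus

Familiar with the Linux operating system and the TCP/IP protocol suite; familiar with network packet processing

Experience with security projects is a plus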

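Compensation and Benefits

Salary: 20-40K

Benefits:

Comfortable work environment, free tea and coffee, 12 days of paid leave

Free health checkups, statutory social insurance and housing fund, supplementary medical insurance for employees and their children, personal accident insurance

Regular team-building activities, monthly birthday parties, a variety of employee clubs, plenty of holiday events, lunch and transportation allowances

Internal discounts, wedding and childbirth gifts, an innovation fund, a well-defined promotion system, and a range of cutting-edge training courses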

Send applications to: lijiejie[at]qiyi.com